Over the last decade or so, the online world has seen a proliferation of user-generated and third-party content. Whether it’s dance craze videos on Instagram, product images on eBay or comedy podcasts on Spotify, massive quantities of content are uploaded every day, and much of it goes unmoderated.

However, the platforms hosting this content must follow specific compliance guidelines. For example, they must ensure that content published by users doesn’t feature nudity or hate speech. The likes of Facebook and YouTube tackle this by employing thousands of content reviewers to deal with the countless images and videos published every second.

Content moderation is just as important for online marketplaces, e-commerce stores, community forums and classified sites. However, with fewer resources, it is usually much harder for them to manage. Not only must staff be trained in the rules of their particular platform, they must also check for inappropriate or offensive content manually. In practice, they may only get through content that has been reported by users or sampled from a wider pool.

Another issue with relying on humans is that they can only cope with so much. Given the increasing amount of content being published online, this approach quickly becomes impractical. It is also unfair on the moderators themselves: constant exposure to upsetting and distressing content carries considerable psychological risks.

So what’s the solution to a problem that’s only going to get worse?

Using AI and ML to automate content moderation

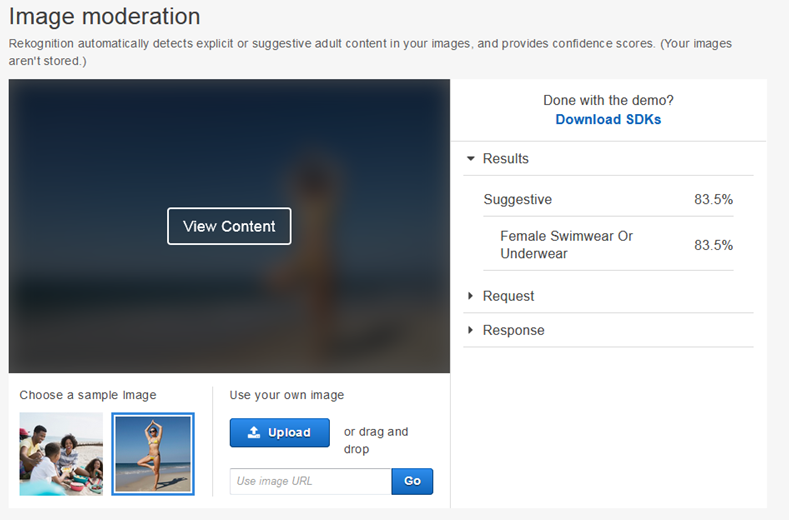

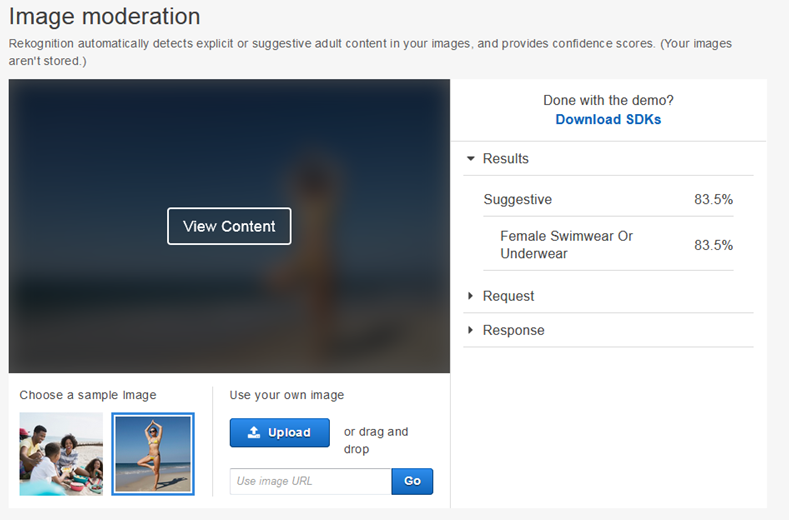

Artificial Intelligence (AI) can be used to moderate content through Machine Learning (ML) services such as Amazon Rekognition. Whether it’s for social media, broadcast media, advertising or e-commerce, Amazon Rekognition’s APIs can detect inappropriate or offensive content in images and videos.
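As a rough illustration of calling the image API from Python, the sketch below asks Rekognition’s `DetectModerationLabels` operation about an image already stored in S3. It assumes a boto3 Rekognition client is passed in, so the function can be tested without AWS credentials; the function name, bucket and key are hypothetical, and the default `MinConfidence` of 50 simply mirrors the service’s own default.

```python
from typing import Any, List


def detect_moderation_labels_s3(client: Any, bucket: str, key: str,
                                min_confidence: float = 50.0) -> List[dict]:
    """Return Rekognition moderation labels for an image stored in S3.

    `client` is assumed to be boto3.client("rekognition"); it is passed in
    rather than created here so the function is easy to test.
    """
    response = client.detect_moderation_labels(
        Image={"S3Object": {"Bucket": bucket, "Name": key}},
        MinConfidence=min_confidence,
    )
    # Each label has a Name, a ParentName (empty for top-level categories)
    # and a Confidence score between 0 and 100.
    return response.get("ModerationLabels", [])
```

In practice you might call it as `detect_moderation_labels_s3(boto3.client("rekognition"), "example-uploads", "listing-42/photo.jpg")`. Video moderation uses a separate, asynchronous pair of operations (`StartContentModeration` and `GetContentModeration`).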

Amazon Rekognition labels inappropriate or offensive content using a two-level hierarchical taxonomy. Each top-level category below contains more specific second-level categories:

| Top-level category |
|---|
| Explicit Nudity |
| Suggestive |
| Violence |
| Visually Disturbing |
| Rude Gestures |
| Drugs |
| Tobacco |
| Alcohol |
| Gambling |
| Hate Symbols |
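Because each detected label reports its parent category, the taxonomy can be rebuilt from an API response. The sketch below assumes the `ModerationLabels` list returned by `DetectModerationLabels`, where top-level labels have an empty `ParentName` and second-level labels name their top-level category there; the helper name is our own.

```python
from collections import defaultdict
from typing import Dict, List


def group_by_top_level(labels: List[dict]) -> Dict[str, List[str]]:
    """Group Rekognition moderation labels under their top-level category."""
    grouped: Dict[str, List[str]] = defaultdict(list)
    for label in labels:
        parent = label.get("ParentName") or ""
        if parent:
            # Second-level label: file it under its top-level category.
            grouped[parent].append(label["Name"])
        else:
            # Top-level label: make sure the category appears even if no
            # second-level label was returned for it.
            grouped.setdefault(label["Name"], [])
    return dict(grouped)
```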

Not only do these categories cover a wide range of what might be considered inappropriate or offensive, they also offer a great deal of flexibility. For example, you can set your own rules for each category to show or censor content in specific countries. Amazon Rekognition also returns a confidence score for each label it detects.
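One way to express per-country rules is sketched below. The rule table, the market keys (which could be country codes) and the 70% threshold are hypothetical placeholders rather than recommended settings, and the labels are assumed to be in the `DetectModerationLabels` response format.

```python
from typing import Dict, List, Set

# Hypothetical rules: top-level categories to censor in each market.
# Illustrative only; not a statement of any country's actual regulations.
CENSOR_RULES: Dict[str, Set[str]] = {
    "default": {"Explicit Nudity", "Violence", "Hate Symbols"},
    "market_a": {"Explicit Nudity", "Violence", "Hate Symbols", "Alcohol", "Gambling"},
}


def should_censor(labels: List[dict], market: str,
                  min_confidence: float = 70.0) -> bool:
    """Return True if any label falls in a category blocked for `market`."""
    blocked = CENSOR_RULES.get(market, CENSOR_RULES["default"])
    for label in labels:
        # Second-level labels name their top-level category in ParentName;
        # top-level labels have an empty ParentName, so fall back to Name.
        category = label.get("ParentName") or label["Name"]
        if category in blocked and label["Confidence"] >= min_confidence:
            return True
    return False
```

Keeping the rules as data rather than code means a new market can be added without touching the decision logic.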

Benefits of using ML for content moderation

– Protect brand

Some brands don’t want to be associated with certain types of content, such as violence or alcohol. ML content moderation can use the rich metadata it generates to filter out these unwanted associations. The same metadata can help protect users from bad actors who share damaging or misleading content about your brand.

– Scale coverage

Human moderators alone cannot scale to meet the growing needs of content moderation at sufficient quality or speed. This can result in a lack of compliance, a poor user experience and a tarnished brand reputation.

– Improve safety

In addition to protecting users from potentially harmful and upsetting content, you can give advertisers assurance that your brand and platform are safe to advertise on.

– Protect people

The psychological effects of viewing harmful content are well documented, with reports of moderators experiencing post-traumatic stress disorder (PTSD) symptoms and other mental health issues. Using an ML model to screen content first can significantly reduce moderators’ exposure to this material.

– Value for money

ML models can deliver a 95–99% reduction in the amount of content that human moderators need to review. This frees them to focus on more valuable activities while still delivering extensive content moderation at a fraction of the cost.
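To put the 95–99% figure in context, here is the arithmetic for a hypothetical platform receiving N = 100,000 uploads a day (an illustrative volume, not a measured one), where r is the fraction of content the model clears and H is what is left for human review:

```latex
\begin{aligned}
H &= N\,(1 - r) \\
N = 100{,}000,\; r = 0.95 &\;\Rightarrow\; H = 100{,}000 \times 0.05 = 5{,}000 \\
N = 100{,}000,\; r = 0.99 &\;\Rightarrow\; H = 100{,}000 \times 0.01 = 1{,}000
\end{aligned}
```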

– Social listening

The data generated by content moderation is also a valuable resource for social listening: it reveals customer sentiment and how your brand is being perceived, both of which directly influence consumer decisions.

Despite the benefits that ML-powered content moderation can provide, it is not a silver bullet. Automated solutions are best used to augment human decision-making when identifying and triaging content.
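A common way to use ML as an aid rather than a replacement is confidence-based triage: clear-cut items are handled automatically and borderline ones go to a person. The sketch below assumes Rekognition-style labels with a `Confidence` field; the function name and both thresholds are hypothetical and would need tuning against a platform’s own data.

```python
from typing import List


def triage(labels: List[dict], reject_at: float = 95.0,
           review_at: float = 60.0) -> str:
    """Route one item to 'approve', 'human_review' or 'reject'."""
    if not labels:
        # Nothing was detected above the MinConfidence used in the request.
        return "approve"
    top = max(label["Confidence"] for label in labels)
    if top >= reject_at:
        return "reject"
    if top >= review_at:
        return "human_review"
    return "approve"
```

Lowering `review_at` sends more items to moderators; raising `reject_at` means fewer automatic removals and more human review.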

Use cases for ML content moderation

The use cases for automated content moderation are extensive when you consider the numerous ways in which consumers interact and engage with brands, organisations and their peers online. Essentially, any platform where users contribute text, images, video or audio is suitable for content moderation.

The most common use cases include:

- Social media – Protecting users from inappropriate content on photo and video sharing platforms, gaming communities and dating apps.

- Broadcast media – Assigning the right content and compliance ratings to third-party videos in accordance with regional market standards and practices.

- Marketing and advertising – Preventing content that is unsuitable or unsafe for a brand to advertise against. This can include images, videos and podcasts.

- Gaming – Preventing content such as hate speech, profanity and bullying from being broadcast to users.

Given the growing need for content moderation in today’s self-service online world, many other industries stand to benefit too, including:

Online retailers

Although online retailers have complete control over their own product listings and descriptions, they often encourage customers to upload and share content for:

- Product reviews – In addition to the potential for offensive images, customers may also use inappropriate language in their product reviews, which could reflect badly on the brand and impact sales.

- Refunds & exchanges – Some organisations require customers to upload images of a product they’ve bought before they can receive a refund or exchange. In this instance, content moderation would protect customer service staff from offensive images.

- Chatbots – Content moderation could also improve employee well-being in chatbot conversations, by filtering or blocking harmful and abusive messages from customers.
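For text such as product reviews or chat messages, the simplest starting point is a word-list filter. The sketch below is a naive, hypothetical example (the function name and terms are placeholders): it masks whole-word, case-insensitive matches, but it will not catch misspellings, deliberate obfuscation or abuse that relies on context, which is where ML services help.

```python
import re
from typing import Iterable, Tuple


def mask_prohibited(text: str, prohibited: Iterable[str]) -> Tuple[str, bool]:
    """Mask whole-word matches of prohibited terms with asterisks.

    Returns the masked text and whether anything was masked. Matching uses
    word boundaries, so a rule for "heck" does not match "checker".
    """
    # Longest terms first, so multi-word phrases win over their parts.
    terms = sorted({t.strip() for t in prohibited if t.strip()}, key=len, reverse=True)
    if not terms:
        return text, False
    pattern = re.compile(
        r"\b(" + "|".join(re.escape(t) for t in terms) + r")\b", re.IGNORECASE
    )
    masked, count = pattern.subn(lambda m: "*" * len(m.group(0)), text)
    return masked, count > 0
```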

Forums and communities

Most online forums are moderated by volunteers or subject-matter enthusiasts. However, some organisations establish their own online forums to foster a sense of community between customers and increase brand awareness.

Once again, ML content moderation can help human moderators ensure posts and pictures meet community guidelines, especially if the brand’s products are a central theme of the forum.

Classifieds and marketplaces

As well as ‘traditional’ classified listing sites where people can sell their prized possessions or unwanted items to others, the gig economy has given rise to a number of alternative online marketplaces. Examples include renting out your home to holidaymakers and ‘selling’ your own skillset such as helping someone assemble flatpack furniture.

But when it comes to posting a listing or advertising on these sites, much of the content moderation is still done by humans. What’s more, issues with product photos or service descriptions are typically only reported after they’ve been live for some time, potentially exposing several users to inappropriate content.

ML can help stop unsuitable, controversial or even illegal content from being uploaded and published, by checking listings before they go live. As a result, listings are far less likely to violate compliance policies.
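The sketch below shows a pre-publication check for a listing with several photos: the listing only goes live if none of its images carries a moderation label at or above a threshold. `get_labels` stands in for any function returning Rekognition-style labels for one image (for example, a wrapper around `DetectModerationLabels`); the function names and the 70% threshold are hypothetical.

```python
from typing import Callable, Dict, List


def listing_decision(image_keys: List[str],
                     get_labels: Callable[[str], List[dict]],
                     min_confidence: float = 70.0) -> Dict[str, object]:
    """Decide whether a listing can be published, before it goes live."""
    flagged: Dict[str, List[str]] = {}
    for key in image_keys:
        # Keep only the labels confident enough to hold the listing.
        hits = [label["Name"] for label in get_labels(key)
                if label["Confidence"] >= min_confidence]
        if hits:
            flagged[key] = hits
    # Publish only when no image was flagged; otherwise hold for review.
    return {"publish": not flagged, "flagged": flagged}
```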

How content moderation works with Amazon Rekognition, Transcribe, Comprehend and Augmented AI

Amazon Rekognition provides fully managed image and video moderation APIs to proactively detect inappropriate, unwanted or offensive content. It can also work alongside Amazon Transcribe, Amazon Comprehend and Amazon Augmented AI as part of a more comprehensive content moderation solution. Here’s what each one does:

- Amazon Rekognition – Allows you to quickly review millions of images or thousands of videos using machine learning, and flag only a small subset of assets for further action. Moderation labels are organised in a hierarchical taxonomy, from which you can create granular business rules for different geographies, target audiences, time of day and so on.

- Amazon Transcribe – Converts speech to text and checks this against your own list of prohibited words or phrases. This can be used to ensure customer service staff are not exposed to offensive or inappropriate voice messages.

- Amazon Comprehend – A natural-language processing (NLP) service that enables you to uncover information in unstructured text, such as entities and sentiments. This helps automatically moderate things like support tickets and forum posts.

- Amazon Augmented AI – Adds human review to audit results, handle low-confidence predictions and make final decisions.
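As an example of the Comprehend side, the sketch below flags support tickets or forum posts with a strongly negative sentiment score so a person can look at them. It assumes a boto3 Comprehend client is passed in and uses the `DetectSentiment` operation; the function name and the 0.8 threshold are hypothetical, and negative sentiment is only a rough proxy for abusive content.

```python
from typing import Any


def flag_negative_text(client: Any, text: str, threshold: float = 0.8,
                       language_code: str = "en") -> bool:
    """Flag text whose Comprehend NEGATIVE sentiment score meets a threshold.

    `client` is assumed to be boto3.client("comprehend").
    """
    response = client.detect_sentiment(Text=text, LanguageCode=language_code)
    # SentimentScore holds Positive, Negative, Neutral and Mixed scores in [0, 1].
    return response["SentimentScore"]["Negative"] >= threshold
```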

The services above are great out-of-the-box options that let you get started quickly with ML content moderation. For more accurate results tailored to your platform, you’ll typically need to build a more bespoke solution around them to meet your unique needs.

The future of content moderation will be driven by AI and ML

The amount of user-generated and third-party content being published online shows no sign of slowing down. But for social media networks, online forums, gaming communities, photo sharing websites and dating apps, reviewing this content to ensure end users are not exposed to inappropriate or offensive material is time consuming, expensive and potentially harmful to human moderators.

Thanks to AI and ML, it’s possible to improve safety for users, protect moderators, streamline operations and save money with automated workflows. Get in touch with the team at DiUS to find out how we can help implement a solution that meets the moderation needs of your business.