Successfully transition your Machine Learning proof of concept into a production-ready model

There’s no doubt that Machine Learning (ML) is here to stay. Our recent ML Report found that over 82% of Australian organisations are interested in applying ML, for both internal and external-facing applications.

Most organisations start with a Proof of Concept (POC) because it’s a great way to create a lightweight version to test the technology and processes before committing to additional budget, resources and organisational changes. Sounds ideal, but there’s a reason why the term “POC Purgatory” is being used more often these days! In fact, our report found that only 21% of organisations have actually moved past this stage and put at least one ML project into production.

Why is ML so hard?

Applying ML in a real-world business context is still relatively new, and its project management practices are less mature than those in software development. ML projects are also more complex because model building is experimental: a model’s results are never 100% perfect, and the effort required to reach the desired performance is often unknown, which makes project planning more difficult.

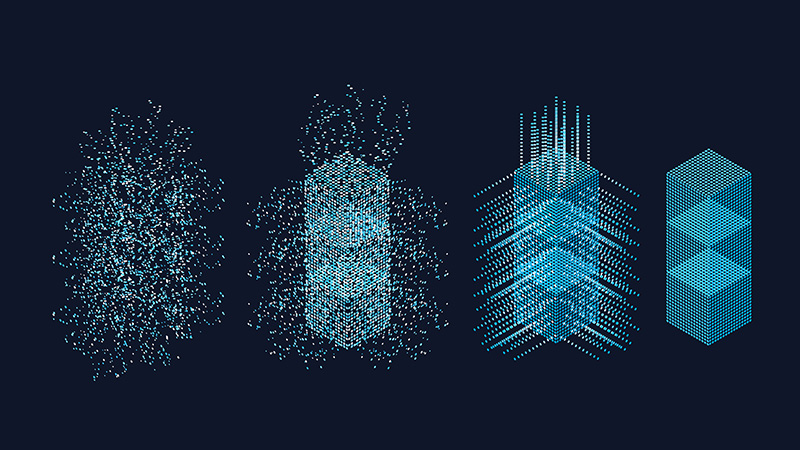

ML requires different toolsets, infrastructure and workflows. This involves understanding how to frame the ML problem, training high-performing models, selecting the right performance metrics and, finally, deploying and productionising the model, all within a much broader business context. Since there are many dimensions to a successful ML project, we usually separate the concerns so that the ML model aspects of the project are tackled first in a POC, and then in MLOps, before going into production.

Here’s what we think are the top 5 ML-specific skills needed to deliver a successful ML POC:

- Knowing how to frame an ML problem with the available data

- Analysing the data to make sure it’s prepared and ready for ML

- Investigating whether to train a custom model or use an off-the-shelf ML service

- Training the ML model effectively

- Evaluating the model and eliminating bias

1. Knowing how to frame an ML problem with the available data

Framing the ML problem is impacted by three important factors:

- the overall business problem you’re trying to solve,

- what data you have available or need to help train the model to solve all or part of the problem, and

- whether ML is a suitable solution to help solve the problem.

Understanding what needs to be predicted, and the outcomes that follow from that prediction, is critical. It then guides which data and which models can be explored to solve the problem. If the problem isn’t framed correctly, you can easily set up a POC that doesn’t achieve its objectives because the data and approach can’t solve the problem that needs to be addressed.

Here's a client example:

A teleradiology provider wanted to classify incoming referrals to prioritise brain scans for urgent analysis. So the problem they needed to solve was how to work out whether a scan was of a brain or some other body part, and whether ML could do this with the data available.

Unlabelled scans were available with their associated radiology reports, but no radiology resources were available to label the scans as “brain” or “not-brain”. So the problem needed to be reframed to focus on obtaining labelled images first. Reframing the problem meant using different data and models to solve it.

DiUS developed a simple NLP model to process a radiology report and predict whether the associated image was of the brain or not. This was used to label a collection of images from which a computer vision model could be trained.
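To picture the labelling step, here is a minimal Python sketch. It is not the model DiUS built: a simple keyword rule stands in for the NLP model, and the term list, function name, scan ids and sample reports are all hypothetical.

```python
import re

# Hypothetical vocabulary (an assumption for illustration); a real project
# would learn which terms matter from the reports rather than hard-code them.
BRAIN_TERMS = {"brain", "cerebral", "intracranial", "ventricles", "cerebellum"}


def label_report(report_text: str) -> str:
    """Return a weak label for the scan that a report describes."""
    words = set(re.findall(r"[a-z]+", report_text.lower()))
    return "brain" if words & BRAIN_TERMS else "not-brain"


# Made-up reports keyed by made-up scan ids.
reports = {
    "scan_001": "No acute intracranial haemorrhage. Ventricles are normal in size.",
    "scan_002": "Mild degenerative change in the lumbar spine.",
}

# The text-derived labels become training targets for an image classifier.
labels = {scan_id: label_report(text) for scan_id, text in reports.items()}
print(labels)
```

The point of the design is that the text model never sees an image. It only produces labels, so the computer vision model can then be trained on labelled scans without any manual labelling effort.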

2. Analysing the data to make sure it’s prepared and ready for ML

The data that powers ML is just as important as the models themselves. ML algorithms learn from data, finding relationships and making decisions based on the training data they’re given. The better the training data is, the better the models perform. However, data requirements for ML are very different to those of traditional business operations, and doing ML well requires you to understand the difference.

ML uses data to determine statistical properties, often combining data from different sources and then trying to predict patterns in data that’s not yet seen.

Professor Neil Lawrence from the University of Cambridge has identified three levels that need to be considered:

- Accessibility: Does it exist? How do I access it? Can I ethically use it?

- Fidelity: How was it collected? Was it aggregated? How accurate is it?

- Context: Is it suitable for my purpose? For example, consumer behaviour data collected before the pandemic might reflect a particular set of priorities, which may make it totally unsuited to training a model to make predictions in the current situation.

Here's a client example:

A music streaming service wanted to understand whether music impacts purchasing patterns by patrons in shopping venues. They were using two datasets, playlists and transactions, but DiUS found that the number of patrons at the time of playing wasn’t measured. This critical data was needed, so DiUS recommended ways to fill the gap by measuring patronage.

3. Investigating whether to train a custom model or to use an off-the-shelf ML service

Most cloud providers now offer Machine Learning as a Service (MLaaS) tools, which are “out of the box” software covering predictive analytics, data pre-processing, model training and tuning, orchestration and model deployment. Examples include:

- AWS: Textract, Rekognition, Forecast, Lex, Polly, Transcribe and Translate

- Google: Text to speech, speech to text, DocumentAI, video/image intelligence AI, vision OCR, natural language, and recommendation AI

- Microsoft Azure: Knowledge mining, conversational AI, document process automation, translation and speech transcription.

MLaaS tools can be a great way to get started in ML, especially for more generic and less complex problems. However, they can be too generic for the problem at hand and aren’t always flexible enough to adapt.

On the flipside, creating a custom model can also be challenging, especially when your organisation doesn’t have the right skill sets. Most organisations find that they end up using a combination of both MLaaS and custom models; our recent ML report found about 52% of organisations are using both.

Here's a client example:

One of our clients in the insurance industry had trained a model for their computer vision use case using the Azure Custom Vision (AutoML) service. The client had selected this component because it required limited knowledge of ML, but it did not achieve the level of performance required. After analysing their data, DiUS implemented a custom model for their use case and exceeded their Custom Vision model benchmark by more than 20% in accuracy.

4. Training the ML model effectively

A lot of performance improvements can be made by addressing the data that the model is learning from. This might include techniques such as data augmentation, super resolution or domain transfer techniques. Feeding the right features in the right format will result in models which can solve the problem at hand in a more performant way.

This is actually more complex than it seems — sometimes you may know a model is providing some level of performance, but when you dig deeper, there can be several issues that aren’t as obvious. As an example, over/underfitting might easily be encountered in practice, and ML techniques such as hyperparameter tuning can come to the rescue:

- Overfitting/underfitting: when the complexity of the model is too low for it to learn the data that it is given as input, the model is said to “underfit”. In other words, an overly simple model can’t learn enough intricate patterns and underlying trends of the given dataset, so it won’t be as effective.

  On the flipside, when the complexity of the model is too high compared to the data that it is trying to learn from, the model is said to “overfit”. In other words, with greater model complexity, the model works really well with the training data, but does not work well with unseen data in the real world.

- Hyperparameter tuning: a hyperparameter is a model argument whose value is set before the learning process begins. These parameters control different aspects of the model, including generalisability and complexity (and hence can help with underfitting/overfitting). Setting these parameters properly requires a deep understanding of the model and data at hand, and is a complex problem. It requires a lot of time and effort from an ML specialist, or it can be delegated to automatic hyperparameter tuning tools. However, the problem with these tools is that they are computationally intensive and can burn the budget really quickly.
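Both ideas can be seen in a few lines of Python. The sketch below is an illustration under stated assumptions, not a recommended setup: the data is synthetic (a noisy sine curve), the model is a tiny k-nearest-neighbours regressor written for the example, and the candidate values of `k` are arbitrary. Here `k` is the hyperparameter. A very small `k` memorises the training data, while a very large `k` averages everything away.

```python
import math
import random


def knn_predict(train, x, k):
    """Average the targets of the k training points closest to x."""
    nearest = sorted(train, key=lambda point: abs(point[0] - x))[:k]
    return sum(y for _, y in nearest) / k


def mean_squared_error(train, data, k):
    """Mean squared error of k-NN predictions over the (x, y) pairs in data."""
    return sum((knn_predict(train, x, k) - y) ** 2 for x, y in data) / len(data)


# Synthetic data: a sine curve plus noise (purely illustrative).
rng = random.Random(0)
points = []
for _ in range(120):
    x = rng.uniform(0, 6)
    points.append((x, math.sin(x) + rng.gauss(0, 0.3)))
train, validation = points[:80], points[80:]

# k=1 reproduces every training point exactly (overfits);
# k=len(train) predicts the global average everywhere (underfits).
results = {}
for k in (1, 5, 15, 40, len(train)):
    results[k] = (
        mean_squared_error(train, train, k),
        mean_squared_error(train, validation, k),
    )
    print(f"k={k:>2}  train MSE={results[k][0]:.3f}  validation MSE={results[k][1]:.3f}")

# Tuning: choose the value that does best on data the model has not seen.
best_k = min(results, key=lambda k: results[k][1])
print("best k:", best_k)
```

Comparing the training and validation error side by side is what exposes the problem. A training error of zero at `k=1` looks excellent until the validation column is read next to it. Automatic tuning tools run the same kind of search over far larger grids, which is where the compute cost comes from.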

Here's a client example:

A smartphone device protection insurer was using ML to determine whether a device screen was damaged. The images used to train the model were collected by the repair centre, but issues arose when the images used in practice were taken by customers using their own phone cameras at home. Different lighting, angles and resolution meant that the ML model had not seen enough variety in training to identify screen damage in images provided by customers.

DiUS created a customised ML model to ensure it was less sensitive to camera effects and incorporated additional training images using phone cameras in different locations.
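One general technique for reducing sensitivity to lighting and orientation is the data augmentation mentioned earlier in this section. The sketch below shows the idea only; it does not describe what DiUS implemented for this client. Images are represented as plain nested lists of greyscale values, and the function names, flip probability and brightness range are assumptions chosen for illustration.

```python
import random

Image = list[list[int]]  # greyscale pixels in the range 0-255, row by row


def flip_horizontal(image: Image) -> Image:
    """Mirror the image left to right."""
    return [row[::-1] for row in image]


def adjust_brightness(image: Image, factor: float) -> Image:
    """Scale every pixel by factor, clamping the result to 0-255."""
    return [[min(255, max(0, round(p * factor))) for p in row] for row in image]


def augment(image: Image, rng: random.Random) -> Image:
    """Return a randomly flipped and re-lit copy of the image."""
    out = flip_horizontal(image) if rng.random() < 0.5 else [row[:] for row in image]
    return adjust_brightness(out, rng.uniform(0.6, 1.4))


# Usage: generate a few varied copies of one (made-up) training image.
rng = random.Random(42)
original = [[10, 200], [120, 60]]
variants = [augment(original, rng) for _ in range(3)]
print(variants)
```

Augmentation only simulates variation. Collecting genuinely new images from the target environment, as in the client example, addresses the gap directly.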

In addition to overfitting/underfitting, there are many other conditions under which a model fails to meet its business objectives. However, when it comes to training a model, there are different techniques that an ML expert can rely on.

5. Evaluating the model and eliminating bias

Here's a client example:

An online retailer used content moderation tools to detect artworks uploaded to the platform that infringe by containing trademarked references. In initial assessments, they realised that the training data provided to the model contained only clean and easily detectable infringements, whereas the actual images were somewhat transformed and harder to detect.

This bias in the data results in a model that is biased towards or against a few brands, and so flags some trademarks and brands more readily than others. Although this is a less critical application than biases towards specific groups of people, the process of finding and removing them is identical. DiUS helped by providing a more inclusive set of training images to alleviate the issue and improve the detection accuracy.

The way you train ML models can often introduce unintended biases, such as excluding data that you think is irrelevant to the model or collecting data that doesn’t accurately represent the complete environment the program is expected to run in. This is evident in the client example shown here, where only clean and easily detectable images were used to train the model instead of those that are harder to detect.

Real-life prejudices can also have a significant impact on the success of an ML solution, leading to a poor customer service experience, reduced sales or illegal and unethical outcomes. There have been many publicised examples of how unintended bias impacts the effectiveness of an ML model, such as racial profiling in crime prediction, gender bias in the hiring and loan processes, and facial recognition that performs differently depending on skin colour.

It’s important that data is representative of the full picture. Organisations developing the algorithms should shape data samples in a way that minimises algorithmic and other types of ML bias, and decision-makers should evaluate when it is appropriate, or inappropriate, to apply ML technology.
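A practical first step in finding this kind of bias is to evaluate the model separately for each group rather than relying on a single overall score. The sketch below is a minimal illustration with made-up brands and made-up results. The `recall_by_group` function and the record layout are assumptions for the example, not part of any particular tool.

```python
from collections import defaultdict


def recall_by_group(records):
    """records: iterable of (group, is_infringing, was_flagged) tuples.

    Returns, for each group, the share of true infringements that were flagged.
    """
    hits = defaultdict(int)
    positives = defaultdict(int)
    for group, is_infringing, was_flagged in records:
        if is_infringing:
            positives[group] += 1
            hits[group] += int(was_flagged)
    return {group: hits[group] / positives[group] for group in positives}


# Made-up evaluation results for two made-up brands.
records = [
    ("brand_a", True, True),
    ("brand_a", True, True),
    ("brand_a", True, False),
    ("brand_a", False, False),
    ("brand_b", True, False),
    ("brand_b", True, False),
    ("brand_b", True, True),
    ("brand_b", True, False),
]

per_group = recall_by_group(records)
gap = max(per_group.values()) - min(per_group.values())
print(per_group, f"gap={gap:.2f}")
```

A large gap between groups suggests that the training data under-represents some of them. The same per-group breakdown applies whether the groups are brands, as here, or groups of people.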

Moving beyond the POC

Despite only 21% of respondents having one or more models in production, Australian businesses are seeing business results from an investment in ML. The majority, 81%, of respondents with one or more models in production have experienced successful business outcomes. So it’s definitely worth the effort!

Complimentary two-hour ML workshop

We can help you successfully transition your ML POC into a production-ready model. To help you work through your POC issues, we can offer you a complimentary two-hour workshop with a DiUS Machine Learning Consultant to understand the business problem, and review and recommend strategies to help improve an existing Machine Learning-powered solution.

DiUS was the first organisation in Australia and New Zealand to obtain the AWS Applied AI Competency, so we have proven experience in delivering ML-powered solutions and helping organisations get out of POC purgatory.

We can help you think beyond the specific pain point, uplift your capabilities and build the skills you need to move forward.