Tech giants are hungry for Artificial Intelligence (AI), and related spending is gearing up for a major boom – 2017 is set to be a big year for AI. We recently had the opportunity to undertake a project demonstrating how to develop an application using Rekognition, a cloud AI service from AWS.

First of all, let’s take a look at some current offerings:

- AWS: Amazon has launched new AI services for developers: image recognition, text-to-speech and Alexa NLP.

- Google’s DeepMind, AlphaGo: Following its 4-1 win over Lee Sedol, an updated version of AlphaGo compiled a 60-0 record against some of Go’s premier players, including the world No. 1.

- IBM Watson: A famous win at Jeopardy! against human players.

- Microsoft Oxford, Azure: The new collection of machine learning offerings is provided to developers as part of Microsoft’s Azure portfolio.

- Facebook face recognition: Facebook opens up its image-recognition AI software to everyone with the aim of advancing the tech so it can one day be applied to live video.

- Alipay from Alibaba: Facial recognition is used for its online payment solution.

In this blog post, we will focus on AWS’s image recognition service: Rekognition.

What is AWS Rekognition?

Amazon Rekognition is a service that makes it easy to add image analysis to your applications. With Rekognition, you can detect objects, scenes, and faces in images. You can also search and compare faces. Rekognition’s API enables you to quickly add sophisticated deep learning-based visual search and image classification to your applications.

Excerpt taken from https://aws.amazon.com/rekognition/

AWS Rekognition is a relatively new AWS service (launched at AWS re:Invent 2016) that uses deep learning (read "the cleverness of deep learning" by Daryl Wilding-McBride) to detect and label thousands of objects and scenes in your images. It is one of three AI-based services on the AWS platform; the other two are Lex, for building conversational interfaces, and Polly, for turning text into lifelike speech.

Why are we looking at it?

Facial recognition technologies are trending as more and more innovative business ideas and cost-saving solutions emerge.

Let’s take a look at several real use cases from around the world.

Gauging customer sentiment

Customer feedback is always important, and usually customer response is emotional rather than rational. A great use case is analysing faces in focus groups – marketers can watch how people react viscerally to products, and use that feedback to make decisions on product features and branding.

- A comedy club in Barcelona is experimenting with charging users per laugh, using facial-recognition technology to track how much they enjoyed the show.

- Amscreen uses cameras mounted at supermarket checkout stands to analyse the age and gender of shoppers and deliver targeted advertising to them.

Attendance and Security Solution

- Walmart: spotting shoplifters by matching faces against an existing gallery of alleged offenders and notifying a loss prevention associate on their mobile within seconds.

- AURORA: attendance and site access control in construction.

Online payment

Facial recognition technology is a hot research topic in the online payment domain.

- E-commerce giant Alibaba Group and affiliated online payment service Alipay are aiming to use facial recognition technology to take the place of passwords.

Art

- An astonishing video released by CGI artist Nobumichi Asai and his team depicts real-time ‘digital makeup’ projected onto a model’s face: https://vimeo.com/103425574

How does it work?

AWS Rekognition is a cloud service powered by deep learning. Its users don’t have to build, maintain or upgrade deep learning pipelines: all the complex computer vision tasks are done in the cloud, leaving users free to focus on high-value application design and development.

Workflow diagram of a typical use case

Above is a typical workflow using AWS Rekognition:

- A live picture is taken by a camera device (e.g. in-store camera or webcam from laptop).

- The picture is sent to the AWS Rekognition cloud service by the web or mobile application. The application can either send the bytes of the picture directly, or save the picture to S3 first and let Rekognition retrieve the S3 object.

- The cloud service returns a JSON response containing the analysis data.

- With the analysis data, the application can either display the result directly or perform further analysis and reporting.
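The workflow above can be sketched in Python as follows. This is a minimal illustration, not the code of our demo app: `analyse_picture` is a hypothetical helper, and the Rekognition client is passed in (for example `boto3.client('rekognition')`) so the function can be tried without AWS credentials.

```python
from typing import Any, Dict, Optional


def analyse_picture(client: Any,
                    image_bytes: Optional[bytes] = None,
                    bucket: Optional[str] = None,
                    key: Optional[str] = None) -> Dict[str, Any]:
    """Send one picture to Rekognition and return the face details.

    `client` is assumed to be a boto3 Rekognition client; it is passed in
    so the function can be exercised with a stand-in object.
    """
    if image_bytes is not None:
        # Option 1: send the picture bytes directly
        image: Dict[str, Any] = {'Bytes': image_bytes}
    elif bucket and key:
        # Option 2: the picture is already in S3; Rekognition fetches it
        image = {'S3Object': {'Bucket': bucket, 'Name': key}}
    else:
        raise ValueError('provide either image_bytes or both bucket and key')
    response = client.detect_faces(Image=image, Attributes=['ALL'])
    faces = response.get('FaceDetails', [])
    # The application can display these directly or feed them into reporting
    return {'face_count': len(faces), 'faces': faces}
```

For example, `analyse_picture(boto3.client('rekognition'), bucket='dius-rekognition-sandpit', key='image_file')` would analyse a picture already stored in S3.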

The currently available features:

- Face analysis, along with confidence scores:

  - Face attributes:

    - Smiling or not, eyes open or not, with or without a mustache, etc.

    - Face position, given by a rectangular bounding box, and landmark points such as the left eye, right pupil, nose, left corner of the mouth and right eyebrow.

    - Emotion analysis: e.g. happy, confused or sad.

    - Gender recognition: male or female.

    - Age detection: an age range, e.g. 35-52.

    - Pose: yaw, roll and pitch.

- Face comparison: a similarity score measuring the likelihood that two facial images are of the same person, together with a confidence score to help evaluate the match.

- Face recognition: given a face collection (which users need to create themselves), Rekognition searches the collection and returns the likely matches along with their confidence scores.

- Other object and scene detection: not only faces, but thousands of other objects and scenes, such as bicycles, sunsets, pets and furniture.
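Face comparison and face recognition map onto the boto3 client methods `compare_faces` and `search_faces_by_image`. The sketch below assumes a boto3 Rekognition client and, for search, a collection you have already created with `create_collection` and populated with `index_faces`. The helper names `same_person` and `search_collection`, and the 80% threshold, are hypothetical choices for illustration.

```python
from typing import Any, Optional, Tuple


def same_person(client: Any, source: bytes, target: bytes,
                threshold: float = 80.0) -> Optional[float]:
    """Return the best similarity score if the faces match above `threshold`, else None."""
    response = client.compare_faces(SourceImage={'Bytes': source},
                                    TargetImage={'Bytes': target},
                                    SimilarityThreshold=threshold)
    matches = response.get('FaceMatches', [])
    return max((m['Similarity'] for m in matches), default=None)


def search_collection(client: Any, collection_id: str, image: bytes,
                      threshold: float = 80.0) -> Optional[Tuple[str, float]]:
    """Search a user-created face collection and return (id, similarity) of the best match."""
    response = client.search_faces_by_image(CollectionId=collection_id,
                                            Image={'Bytes': image},
                                            MaxFaces=5,
                                            FaceMatchThreshold=threshold)
    matches = response.get('FaceMatches', [])
    if not matches:
        return None
    best = max(matches, key=lambda m: m['Similarity'])
    face = best['Face']
    # ExternalImageId is only present if one was supplied when indexing the face
    return face.get('ExternalImageId', face['FaceId']), best['Similarity']
```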

A Simple Rekognition Application

Figure 1: DiUS Rekognition Study Demo Workflow

In our experiment, we built a simple proof-of-concept (POC) application to detect faces in input images using the Rekognition API. As illustrated in Figure 1, the picture is uploaded to the POC application and sent to AWS Rekognition for analysis.

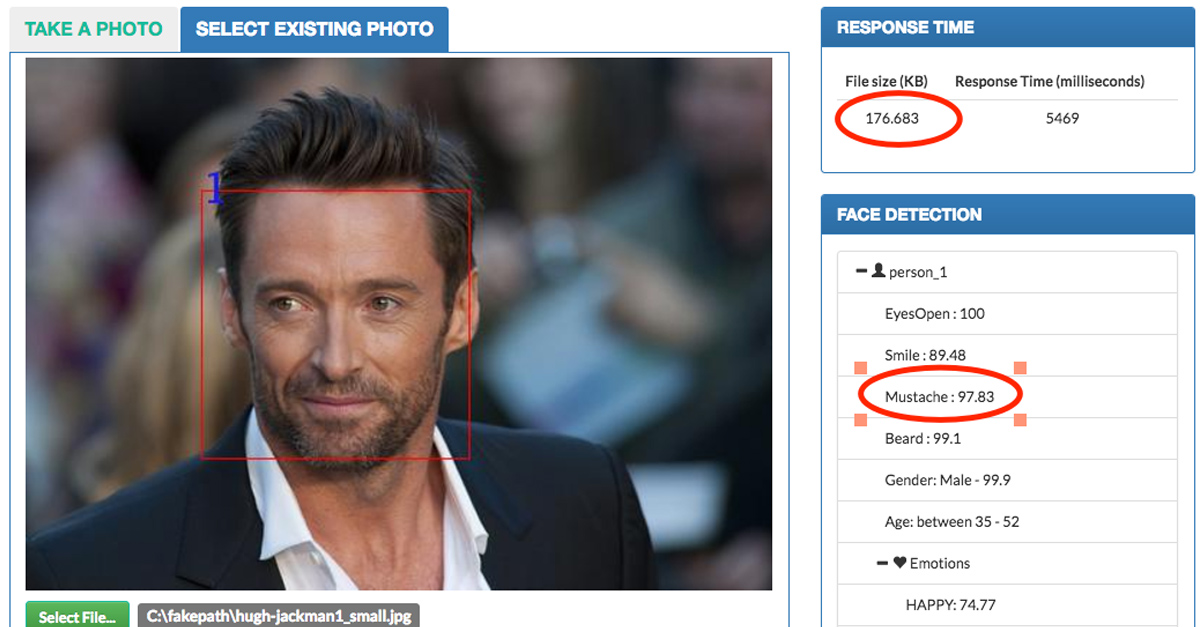

The input images can be captured using a webcam or selected from existing files on the local computer. The webcam lets us capture photos of fair quality (roughly 250KB) and instantly test the Rekognition service with different emotions (see Figure 2). The “Select Existing Photo” option is really handy if you want to use existing images, especially large files that the webcam cannot produce.

Figure 2: DiUS Rekognition Service Demo App

The snippets below use the AWS SDK for Python (boto3) to call the Rekognition API, assuming that your AWS credentials are already set up and configured. For more information about configuring AWS credentials, see the boto3 configuration documentation.

Rekognition provides two ways to retrieve face details for an input image:

- Using a photo on a local drive. The photo is converted to a byte array before being submitted:

```python
import base64
import boto3

base64_img = "data:image/jpeg;base64,/9j/4AAQSkZJ…"  # data URL of the photo (truncated)
# Drop the "data:image/jpeg;base64," prefix before decoding to raw bytes
img_bytes = base64.b64decode(base64_img.split(",", 1)[1])
rekognition = boto3.client('rekognition')
response = rekognition.detect_faces(Image={'Bytes': img_bytes}, Attributes=['ALL'])
```

- Using a file from an S3 bucket. This is useful when your photos are already on S3:

```python
import boto3

s3_object = {'Bucket': 'dius-rekognition-sandpit', 'Name': 'image_file'}  # bucket and object key
rekognition = boto3.client('rekognition')
response = rekognition.detect_faces(Image={'S3Object': s3_object}, Attributes=['ALL'])
```

Attributes parameter (DEFAULT or ALL): decides which facial attributes are included in the response. The default value is DEFAULT, which returns only a limited set of attributes: BoundingBox, Confidence, Pose, Quality and Landmarks. If you provide ALL, all attributes are returned, but the operation takes longer.

For each face detected, the operation returns face details including a bounding box of the face, a confidence value and a fixed set of attributes such as mustache, gender and smile. The following JSON response shows face details for one person with associated attributes:

```json
{
  "FaceDetails": [
    {
      "Confidence": 99.99974060058594,
      "Eyeglasses": {"Confidence": 99.99929809570312, "Value": false},
      "Sunglasses": {"Confidence": 99.59443664550781, "Value": false},
      "Gender": {"Confidence": 99.92845916748047, "Value": "Male"},
      "Emotions": [
        {"Confidence": 40.27277374267578, "Type": "CONFUSED"},
        {"Confidence": 29.206937789916992, "Type": "SAD"},
        {"Confidence": 5.873501300811768, "Type": "ANGRY"}
      ],
      "AgeRange": {"High": 43, "Low": 26},
      "EyesOpen": {"Confidence": 73.00408172607422, "Value": true},
      "Smile": {"Confidence": 97.41291809082031, "Value": false},
      "MouthOpen": {"Confidence": 99.83804321289062, "Value": false},
      "Quality": {"Sharpness": 99.884521484375, "Brightness": 34.98160934448242},
      "Mustache": {"Confidence": 99.68995666503906, "Value": false},
      "Beard": {"Confidence": 99.8032455444336, "Value": false}
    }
  ]
}
```
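Applications usually reduce a response like this to a few fields. A minimal sketch, assuming a response requested with `Attributes=['ALL']` and shaped like the one above; `summarise_face` and the 90% confidence cut-off are hypothetical choices, not part of the Rekognition API:

```python
from typing import Any, Dict

BOOLEAN_ATTRIBUTES = ('Smile', 'Eyeglasses', 'Sunglasses', 'Beard',
                      'Mustache', 'EyesOpen', 'MouthOpen')


def summarise_face(face: Dict[str, Any], min_confidence: float = 90.0) -> Dict[str, Any]:
    """Flatten one FaceDetails entry, keeping yes/no attributes only when confident enough."""
    # Gender and AgeRange are only present when Attributes=['ALL'] was requested
    summary: Dict[str, Any] = {
        'gender': face['Gender']['Value'],
        'age_range': (face['AgeRange']['Low'], face['AgeRange']['High']),
    }
    for name in BOOLEAN_ATTRIBUTES:
        attr = face.get(name)
        if attr is not None and attr['Confidence'] >= min_confidence:
            summary[name] = attr['Value']
    return summary


# A subset of the sample response above
SAMPLE_FACE = {
    'Gender': {'Confidence': 99.92845916748047, 'Value': 'Male'},
    'AgeRange': {'High': 43, 'Low': 26},
    'EyesOpen': {'Confidence': 73.00408172607422, 'Value': True},
    'Smile': {'Confidence': 97.41291809082031, 'Value': False},
    'Beard': {'Confidence': 99.8032455444336, 'Value': False},
}
# EyesOpen (73%) is dropped because it is below the 90% cut-off
print(summarise_face(SAMPLE_FACE))
# prints {'gender': 'Male', 'age_range': (26, 43), 'Smile': False, 'Beard': False}
```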

Rekognition Experiment

We carried out experiments with several factors that affect the Rekognition API results. The following section outlines the experiment results: what Rekognition does well and what it does not.

What Rekognition Does Well

Low Resolution Images

Although Rekognition accepts images as small as 80 x 80px, for the best results the image should be 640 x 480px. We ran a few tests with the same image at different resolutions and found that some attributes were not detected in the lower-resolution images. Figure 3 shows a high-resolution image which retains all facial attributes. However, in Figure 4, Rekognition cannot detect the mustache in the lower-resolution image.

Figure 3: High resolution image 640 x 480 retains all facial attributes

Figure 4: Low resolution image 130 x 93 cannot retrieve Mustache attribute
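Since small images lose attributes, an application could warn users before uploading. A hypothetical pre-check, assuming the 80 x 80px minimum and 640 x 480px recommendation quoted above, and treating portrait images (480 x 640) the same as landscape ones:

```python
MIN_SIDE = 80             # smallest accepted width/height, as quoted above
RECOMMENDED = (640, 480)  # recommended resolution for best results


def check_resolution(width: int, height: int) -> str:
    """Classify an image as 'rejected', 'low' or 'ok' before sending it to Rekognition."""
    long_side, short_side = max(width, height), min(width, height)
    if short_side < MIN_SIDE:
        return 'rejected'
    if long_side < RECOMMENDED[0] or short_side < RECOMMENDED[1]:
        return 'low'
    return 'ok'


print(check_resolution(130, 93))  # the Figure 4 image: prints low
```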

Low-light Images

Rekognition does a good job with low-light images. All exposed facial elements are detected and returned by Rekognition. However, it doesn’t return attributes for facial elements that are not visible to the human eye, which makes sense. In Figure 5, Rekognition returned all visible facial elements correctly.

Figure 5: Facial attributes displayed correctly in low-light image
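Rekognition reports image quality for each face in the Quality field (Brightness and Sharpness, each on a 0-100 scale). A hypothetical helper could use it to tell users their photo is dark even though detection still works; the thresholds below are assumptions to tune, not AWS recommendations:

```python
from typing import Any, Dict, List


def quality_warnings(face: Dict[str, Any],
                     min_brightness: float = 40.0,
                     min_sharpness: float = 50.0) -> List[str]:
    """Return warnings based on the Quality field of one FaceDetails entry.

    Both thresholds are hypothetical defaults, not values published by AWS.
    """
    quality = face.get('Quality', {})
    warnings = []
    if quality.get('Brightness', 100.0) < min_brightness:
        warnings.append('low brightness')
    if quality.get('Sharpness', 100.0) < min_sharpness:
        warnings.append('low sharpness')
    return warnings


# Quality values from the sample response shown earlier
sample_face = {'Quality': {'Sharpness': 99.884521484375, 'Brightness': 34.98160934448242}}
print(quality_warnings(sample_face))  # prints ['low brightness']
```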

Accurate In Some Attributes

Rekognition works well with two facial attributes, namely Age Range and Gender. In all the experiments we tried, these attributes always returned accurate values.

Performance

Here is a simple benchmark performed using the us-west-2 region, which was the nearest AWS region to Melbourne with Rekognition available at the time of writing. FileX_Small and FileX_Large are the same image at different resolutions.

| File | Size (KB) | Read by Rekognition from S3 (uploaded by our app) | Time (s) |
|---|---|---|---|
| File1_Small | 8 | N | 2.37 |
| File1_Small | 8 | Y | 3.91 |
| File1_Large | 529 | N | 7.18 |
| File1_Large | 529 | Y | 7.96 |
| File2_Small | 7 | N | 2.34 |
| File2_Small | 7 | Y | 3.31 |
| File2_Large | 220 | N | 4.89 |
| File2_Large | 220 | Y | 6.14 |
| File3_Small | 10 | N | 2.24 |
| File3_Small | 10 | Y | 3.16 |
| File3_Large | 2400 | N | 6.41 |
| File3_Large | 2400 | Y | 12.69 |

From the table above, sending the picture bytes directly to Rekognition was faster in every case than having Rekognition read the picture from S3. For a typical laptop webcam picture of 200-300KB, expect around 4-6s of latency before the result comes back.
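The S3 overhead can be worked out from the benchmark table, and a similar benchmark could be re-run with a small timing helper. A sketch under stated assumptions: the numbers are copied from the table, while `s3_overhead`, `median_latency` and the use of the median over several runs are our own hypothetical choices (we do not know how many runs the original figures reflect).

```python
import statistics
import time
from typing import Any, Callable, Dict, Tuple

# (size in KB, seconds sending bytes directly, seconds via S3), copied from the table
RESULTS: Dict[str, Tuple[int, float, float]] = {
    'File1_Small': (8, 2.37, 3.91),
    'File1_Large': (529, 7.18, 7.96),
    'File2_Small': (7, 2.34, 3.31),
    'File2_Large': (220, 4.89, 6.14),
    'File3_Small': (10, 2.24, 3.16),
    'File3_Large': (2400, 6.41, 12.69),
}


def s3_overhead(results: Dict[str, Tuple[int, float, float]]) -> Dict[str, float]:
    """Extra seconds spent when the picture goes through S3 instead of as raw bytes."""
    return {name: round(via_s3 - direct, 2)
            for name, (_, direct, via_s3) in results.items()}


def median_latency(call: Callable[[], Any], repeats: int = 5) -> float:
    """Median wall-clock time in seconds of `call` over `repeats` runs."""
    timings = []
    for _ in range(repeats):
        start = time.perf_counter()
        call()
        timings.append(time.perf_counter() - start)
    return statistics.median(timings)


if __name__ == '__main__':
    for name, extra in s3_overhead(RESULTS).items():
        print(f'{name}: +{extra:.2f}s via S3')
```

To time a real call, pass a closure such as `lambda: rekognition.detect_faces(Image={'Bytes': img_bytes}, Attributes=['ALL'])`; for the S3 path, the closure would call `s3.upload_file(...)` before `detect_faces` so the upload is included, as in the table.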

What Rekognition is not good for

Obscured Images

Rekognition does not seem to work well with obscured images. The facial attributes were incorrect in most of the images we tested. In Figure 6, the mustache, beard and smile attributes are incorrect, and the emotion confidence scores are low as well.

Figure 6: Facial attributes display incorrectly in obscured images

Emotions

Rekognition works well with images of happy faces: the facial attributes are returned correctly most of the time. However, it struggles with images of sad faces. Figure 5 shows an image with sad faces, but only person two’s emotion is detected correctly. Person one is detected as surprised and person three as angry, both of which are incorrect.
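Given these misclassifications, an application may prefer to report an emotion only when Rekognition is reasonably confident. A minimal sketch; `dominant_emotion` and the 50% cut-off are hypothetical:

```python
from typing import Any, Dict, Optional


def dominant_emotion(face: Dict[str, Any], min_confidence: float = 50.0) -> Optional[str]:
    """Return the highest-confidence emotion Type, or None if it is below min_confidence."""
    emotions = sorted(face.get('Emotions', []),
                      key=lambda e: e['Confidence'], reverse=True)
    if not emotions or emotions[0]['Confidence'] < min_confidence:
        return None  # treat weak predictions as "unknown" rather than trusting them
    return emotions[0]['Type']


# Emotions from the sample response shown earlier: CONFUSED tops out at about 40%
sample_face = {'Emotions': [
    {'Confidence': 40.27277374267578, 'Type': 'CONFUSED'},
    {'Confidence': 29.206937789916992, 'Type': 'SAD'},
    {'Confidence': 5.873501300811768, 'Type': 'ANGRY'},
]}
print(dominant_emotion(sample_face))  # prints None
```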

It is a Black Box

Although the API works, it does not give us much flexibility in its operation parameters. The DetectFaces API only lets us pass the image and one of two attribute values (DEFAULT or ALL). We cannot fine-tune which attributes the API returns. What if I want the API to return emotions only?

Figure 7: Facial attributes display incorrectly in obscured images
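The practical workaround is to request ALL and discard what you don’t need on the client. A minimal sketch; `keep_attributes` is a hypothetical helper, and it does not make the API call itself any faster:

```python
from typing import Any, Dict, Iterable, List


def keep_attributes(response: Dict[str, Any], wanted: Iterable[str]) -> List[Dict[str, Any]]:
    """Keep only the requested keys of each face in a DetectFaces response.

    Filtering happens client-side; the API still computes everything requested
    via Attributes (Emotions are only returned with Attributes=['ALL']).
    """
    wanted = list(wanted)
    return [{key: face[key] for key in wanted if key in face}
            for face in response.get('FaceDetails', [])]


# Usage, assuming `rekognition` is a boto3 Rekognition client:
# response = rekognition.detect_faces(Image={'Bytes': img_bytes}, Attributes=['ALL'])
# emotions = keep_attributes(response, ['Emotions'])
```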

The underlying model cannot be trained

Rekognition is built with machine learning concepts in mind. One of the important features of machine learning is training on data, which enables a machine to learn and get smarter. Unfortunately, Rekognition does not yet allow us to train a model with a new set of data.

Conclusion

Generally speaking, the AWS Rekognition service is fairly easy to use and offers pretty powerful functionality. With its current abilities, developers with no deep learning knowledge can easily and quickly use it for business-related projects, for example analysing the age and gender of shoppers at checkout counters and delivering targeted advertising. We believe there will be a growing number of business applications based on the AWS Rekognition service in the future.