We’ve all been flooded with posts, tweets and discussions on ChatGPT and how it can be used across many industries, especially for legal and marketing activities. While there are probably as many critics as embracers of the technology, I thought I’d take a different view and explore how ChatGPT could be used to add customer value to existing products.

Let’s start with ChatGPT vs GPT-3

Before we go any further, there are a few things we need to address, as they definitely stopped me in my tracks when I was doing my research. Some of the articles I read confuse ChatGPT with GPT-3 and, in some cases, use the terms interchangeably. They are definitely not the same, and mixing them up can send you down the wrong path, as I personally experienced when trying to understand how ChatGPT could be leveraged within product development.

So, let’s clear this up! ChatGPT and GPT-3 are both language models developed by OpenAI, a US-based AI research and deployment company. GPT-3 is short for Generative Pre-trained Transformer 3, and it’s OpenAI’s third-generation language model, whereas ChatGPT is fine-tuned from a model in the later GPT-3.5 series and is purposefully designed to operate as a chatbot.

ChatGPT has only just become available through an Application Programming Interface (API) and is currently in Beta. Previously, ChatGPT was only available via a web interface and could not be used commercially because it didn’t have an API to connect it with other applications.

The closest thing to it was using the GPT-3 API. However, GPT-3 works very differently from ChatGPT. As an example, if I asked GPT-3 to give me the best chocolate to buy, it might suggest a brand. If I then followed up with another question, such as “how much does it cost?” it wouldn’t understand the context or my initial question, and would give me a generic response such as “It depends on what you are asking about.” In comparison, ChatGPT works in the conversational sense, so that any follow-on questions are considered in context with the previous question. This allows for a dialogue flow, and it would know that I was asking how much the chocolate brand costs.
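To make the difference concrete, here is a minimal sketch of how a client could carry that dialogue over the ChatGPT API. It assumes the chat request format of a model name plus a list of role/content messages, where the earlier turns are sent again with each new question. The helper names and the placeholder reply are mine, and nothing is sent over the network.

```python
import json

# Assumed request shape: a model name plus a list of {"role", "content"}
# messages. This only builds the body; it does not call the API.
MODEL = "gpt-3.5-turbo"


def add_turn(history, role, content):
    """Return a new history list with one more message appended."""
    return history + [{"role": role, "content": content}]


def build_request(history):
    """Build the request body; the whole history goes along each time."""
    return {"model": MODEL, "messages": history}


history = []
history = add_turn(history, "user", "What is the best chocolate to buy?")
# Placeholder standing in for whatever the model actually replied.
history = add_turn(history, "assistant", "<brand suggested by the model>")
history = add_turn(history, "user", "How much does it cost?")

body = build_request(history)
print(json.dumps(body, indent=2))
```

Because the follow-up question travels with the first question and its answer, the model has what it needs to work out that “it” means the chocolate brand.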

OpenAI has only just released the ChatGPT API, which now makes it available for commercial consumption. Shopify and Snap have been quick to jump in, both announcing new product features that will use the new API. I’m sure we will see a flurry of announcements over the coming days, weeks and months. According to OpenAI, the new ChatGPT API can be used to write code, draft content, answer questions, create chat agents, translate text and much more.

Experimenting with ChatGPT

I decided to experiment and play around with the ChatGPT API to see what sort of problems it could solve across different industries.

Product subscription options

One example is product subscriptions, because most products, whether digital or physical, offer some sort of subscription. I often find it hard to compare all the plans and find the one that is right for my needs. Could ChatGPT be used to help me decide which plan suits me best?

What if we built a user interface that allowed the user to put in their needs so it could then provide suggestions? As an example, it could be used on the Spotify pricing page with some sort of user interface that prompted me for my needs. I could type something in like, “What is the best plan for a family of five?”

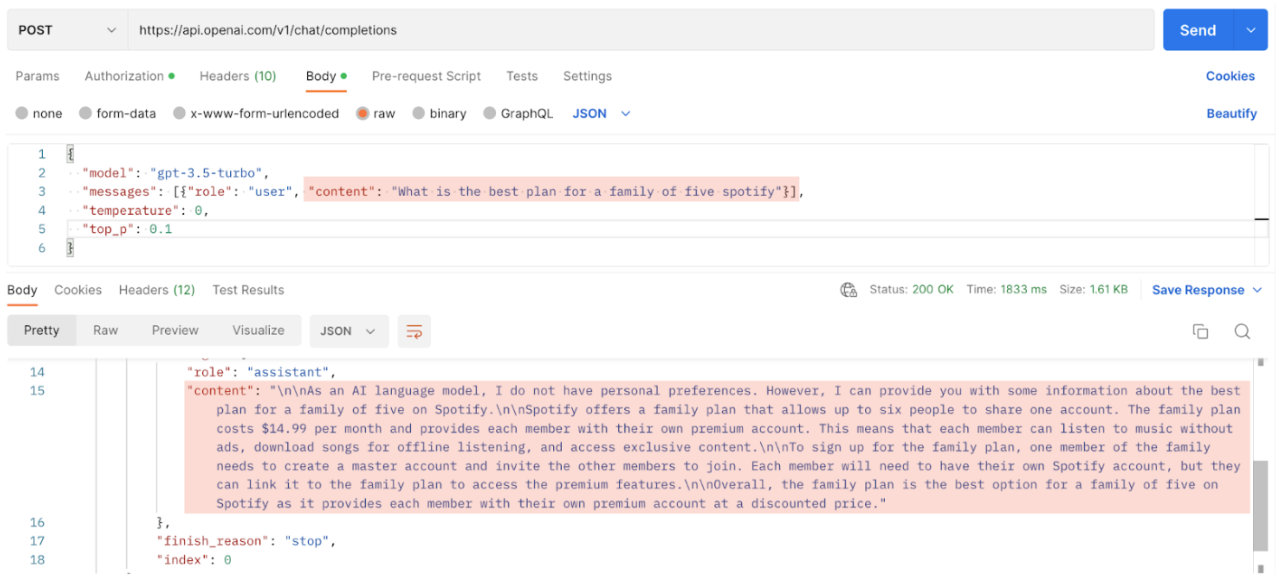

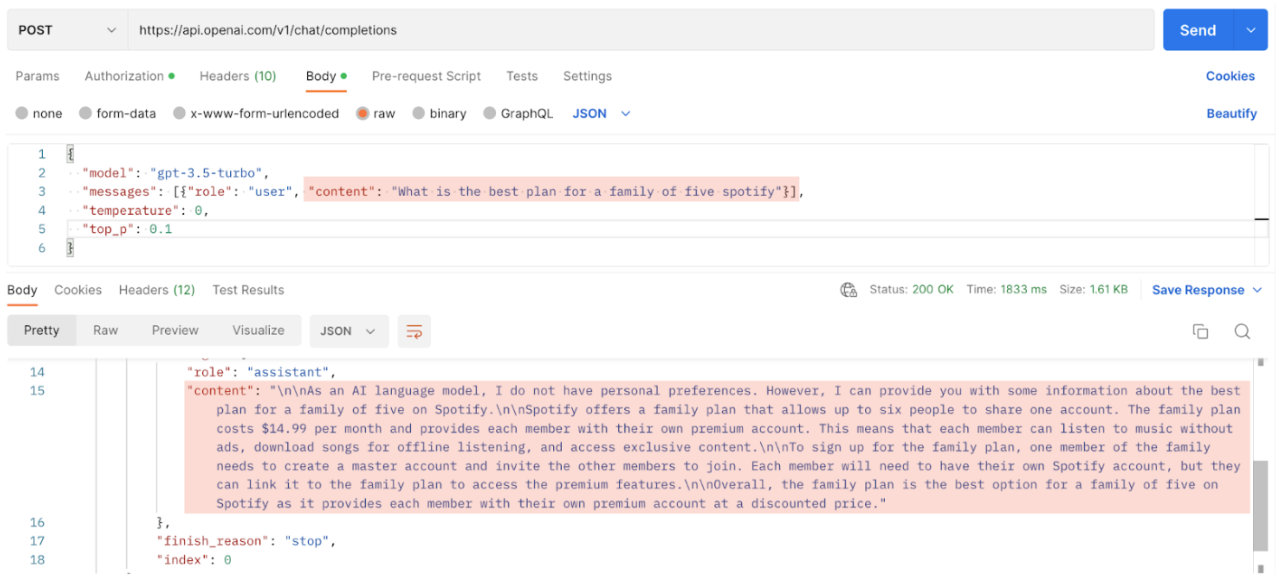

You would need to shape the request so that the response is tailored to your product brand and does not suggest an alternative. I ran an example of this using Postman (a platform for building, using and testing APIs). The response was pretty good: it suggested the Family plan, told me it could be used by six members and provided a price.
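As a rough sketch of what tailoring the request could look like, the snippet below puts the brand constraint into a system message ahead of the user’s question. This is one possible approach rather than the exact request I ran in Postman. The function name and the wording of the instruction are hypothetical, and the plan names are illustrative only, so check them against the live pricing page.

```python
import json


def build_plan_request(brand, plans, question):
    """Build a chat request body that asks the model to stay on-brand."""
    system_prompt = (
        f"You are a pricing assistant for {brand}. "
        f"Recommend only from these plans: {', '.join(plans)}. "
        "Do not mention other brands or services."
    )
    return {
        "model": "gpt-3.5-turbo",
        "messages": [
            {"role": "system", "content": system_prompt},
            {"role": "user", "content": question},
        ],
    }


# Illustrative plan names only, not taken from a live pricing page.
request_body = build_plan_request(
    "Spotify",
    ["Individual", "Duo", "Family", "Student"],
    "What is the best plan for a family of five?",
)
print(json.dumps(request_body, indent=2))
```

An instruction like this steers the model but does not guarantee it will never mention a competitor, so it is worth pairing with a check on the response.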

Here is an example showing Spotify plans:

Travel advice

Another example where ChatGPT could be useful is within a travel application, which can make suggestions on where to go based on seasonality and budget. As an example, I made a request for a travel destination for ten days, for two people on a budget of $10,000. I did not specify the time of year or the currency.

As a result it gave me a number of suggestions such as Bali, Costa Rica, Greece, Japan (to name a few) as excellent travel destinations for ten days with a budget of $10,000 for two adults, and listed some of the activities I could do.

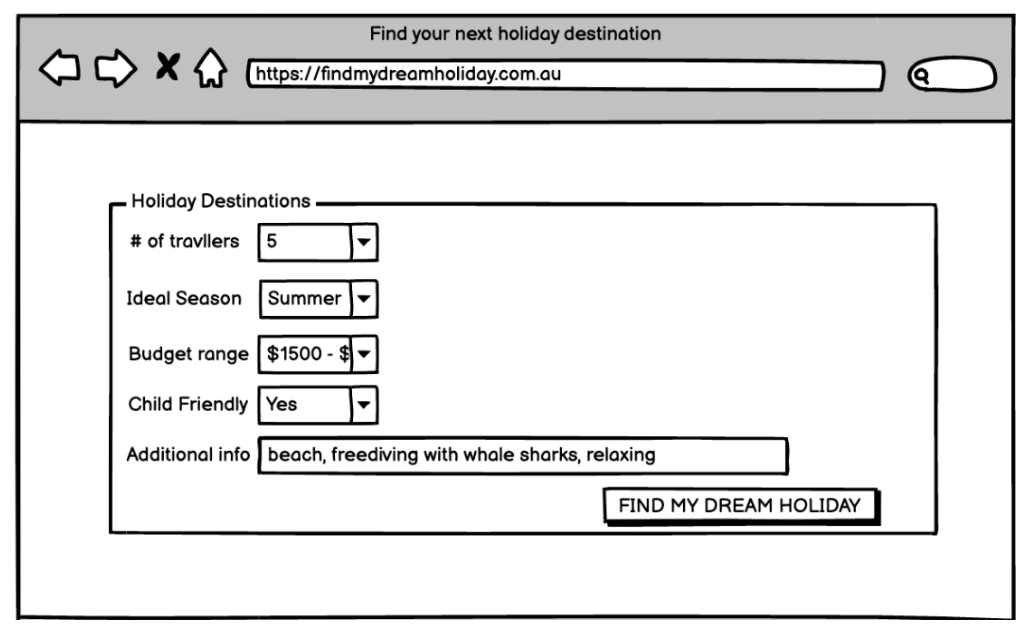

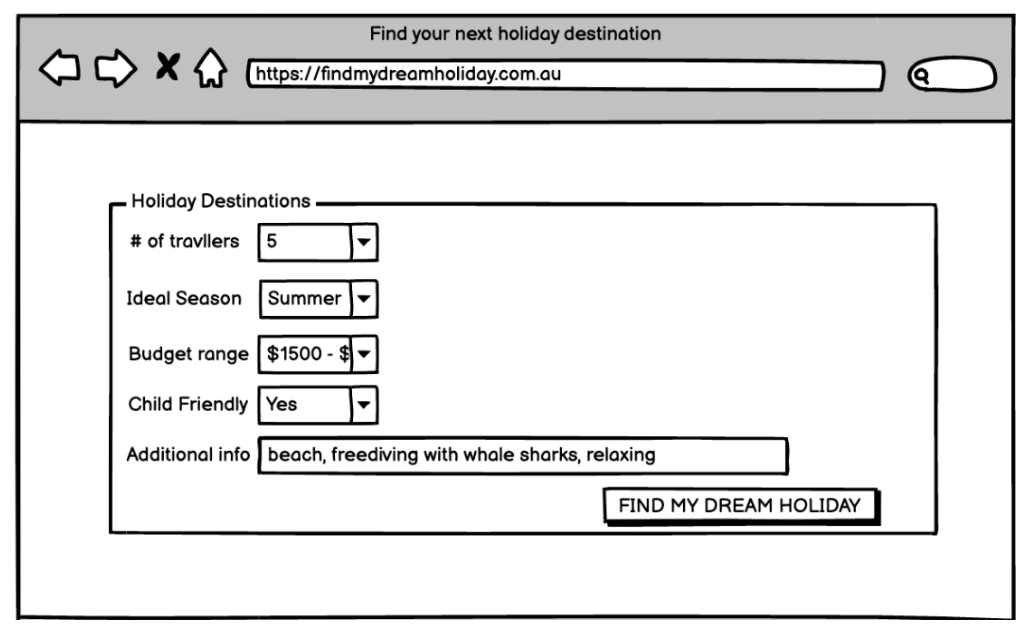

I’m not sure how accurate this is, as a few pieces of information aren’t factored in, such as the currency and my departure location, but it’s not a bad place to start researching my next holiday destination. You would definitely need to give it more information to get more relevant suggestions. It is also important to note that Generative Pre-trained Transformer models are not designed specifically for making recommendations.

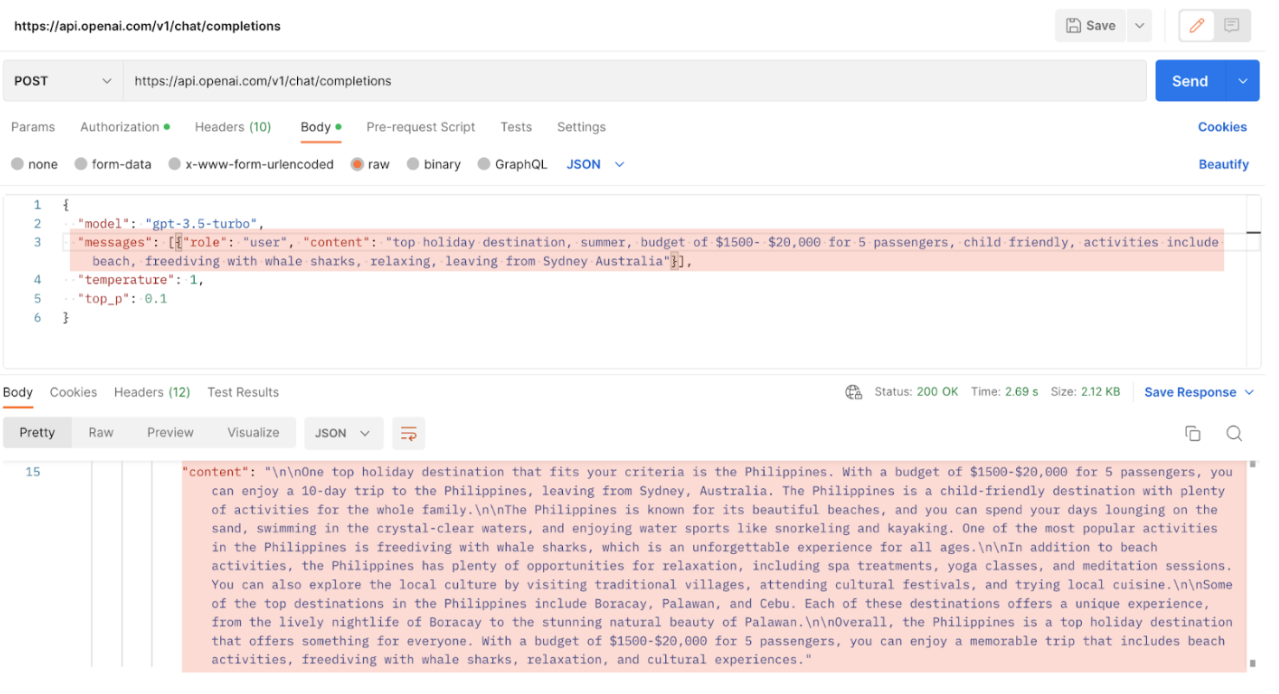

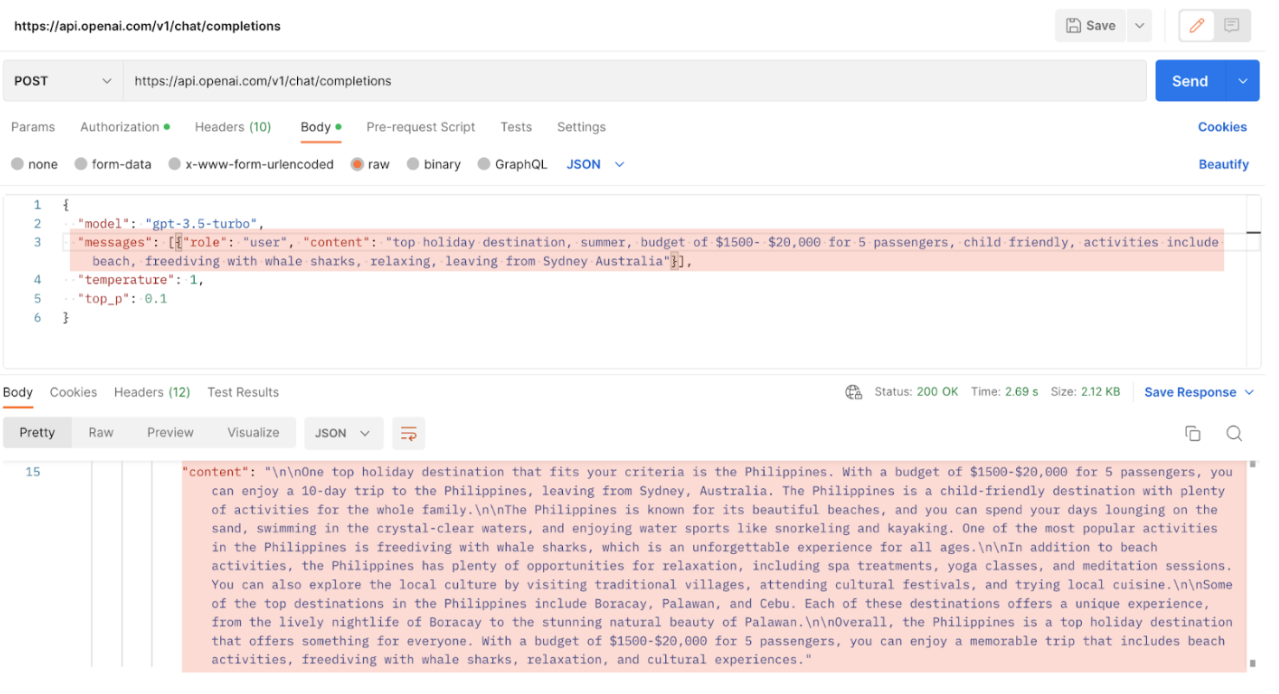

Here is an example of what a user interface that can handle more custom and specific input parameters that meet the user’s needs could look like:

In this case, I was able to specify that I wanted a holiday that was child-friendly and offered free diving with sharks, and that I was departing from Sydney Australia. It provided a great starting point for me to start planning my next holiday to the Philippines.
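Behind an interface like that, the form fields would need to be turned into a prompt. Here is a minimal sketch of one way to do it. The `TripRequest` fields and `build_prompt` are hypothetical names, and the AUD currency is my assumption, since the original request never stated one.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class TripRequest:
    """Hypothetical form fields collected by the user interface."""
    departure_city: str
    days: int
    travellers: int
    budget: int
    currency: str
    must_haves: List[str] = field(default_factory=list)


def build_prompt(trip):
    """Turn the structured fields into one explicit prompt string."""
    lines = [
        f"Suggest holiday destinations for {trip.travellers} people "
        f"for {trip.days} days.",
        f"Departing from: {trip.departure_city}.",
        f"Total budget: {trip.budget} {trip.currency}.",
    ]
    if trip.must_haves:
        lines.append("Requirements: " + "; ".join(trip.must_haves) + ".")
    return "\n".join(lines)


trip = TripRequest(
    departure_city="Sydney, Australia",
    days=10,
    travellers=2,
    budget=10000,
    currency="AUD",  # assumed for illustration
    must_haves=["child-friendly", "free diving with sharks"],
)
prompt = build_prompt(trip)
print(prompt)
```

Collecting the details as separate fields means the currency and departure location can no longer be left out by accident.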

Machine learning can be most effective for a product or application when the model has been trained on your own data, or on data that is highly relevant to your offering. All of these examples rely only on the data that the GPT model was originally trained on, which might not be the best fit for a commercial application.

Unfortunately, fine-tuning is not currently available with the ChatGPT API, although it is available with GPT-3. Fine-tuning allows you to train the model on your own data, which makes for a much richer product suggestion experience that can be further controlled to ensure relevancy.

Potential issues to consider

There are some drawbacks and issues that need to be taken into account before going ahead and using ChatGPT within your product. These are:

- Relevance: At the time of writing, the ChatGPT API has only just been released and it’s not clear what its knowledge cutoff is. I assumed that it was trained on the same data as the web interface version of ChatGPT. I asked it some questions about dates and events, and the answers suggested that the model was trained on data up to 2021. In the Spotify example, the prices are definitely not current and it is not clear which region they apply to. So, if your product is offered across different locations, it’s important to consider things such as region, and I would suggest handling this by providing a few additional parameters within the request.

Prompt engineering can significantly help produce more relevant and useful output. It focuses on structuring the input and giving the model clear instructions. Your product should provide some good example prompts to help your users get the best results.

- Algorithmic Bias: This can occur when the data used to train the model is not a fair representation, and could result in responses that are, for example, racist or sexist. Although not evident in my examples, without knowing what data was used to train the model that provides these responses, I can’t rule out bias in the results. The API also includes a “logit_bias” parameter, which maps token IDs to a bias value between -100 and 100 and can be used to make certain tokens more or less likely to be selected.

- Misinformation: ChatGPT has been trained on large sets of data from various sources such as Wikipedia and Common Crawl. With such large public data sets used for training, it’s easy to see how incorrect information could be displayed. Some of this information could be harmful.

- Nonsensical Responses: ChatGPT can provide nonsensical responses, which need to be carefully considered. Thankfully, the API provides a couple of controls that can be sent in the request to help minimise such responses: temperature and top_p. Both are optional and default to 1 if not provided. It is recommended to alter one of these input parameters but not both.

Temperature controls the randomness of responses. It can be dialled down to 0, which gives more consistent responses, while dialling it up to 2 leads to more random responses. Top_p limits which tokens the model chooses from when predicting the next word. If it is set to 0.1, only the most likely tokens that together make up the top 10% of probability mass are considered.
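Here is a small sketch of how a product could apply these two settings safely. `sampling_options` is a hypothetical helper of my own. The temperature range of 0 to 2 comes from the description above, the 0 to 1 range for top_p is my assumption, and rejecting both at once is a design choice that enforces the recommendation rather than a rule of the API.

```python
def sampling_options(temperature=None, top_p=None):
    """Return the sampling part of a request body, allowing only one control."""
    if temperature is not None and top_p is not None:
        raise ValueError("set temperature or top_p, not both")
    options = {}
    if temperature is not None:
        if not 0 <= temperature <= 2:
            raise ValueError("temperature must be between 0 and 2")
        options["temperature"] = temperature
    if top_p is not None:
        # Assumed range: top_p is a share of probability mass.
        if not 0 <= top_p <= 1:
            raise ValueError("top_p must be between 0 and 1")
        options["top_p"] = top_p
    return options


# A low temperature for more consistent plan suggestions.
body = {
    "model": "gpt-3.5-turbo",
    "messages": [
        {"role": "user", "content": "What is the best plan for a family of five?"}
    ],
    **sampling_options(temperature=0),
}
print(body)
```

Leaving both arguments out returns an empty dictionary, so the request falls back to the API defaults.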

- Commercial Risk: Most products have a specific tone of voice, and responses will most likely not match your brand’s tone. This risk can be reduced with disclaimers, and by releasing the feature to a subset of users first for testing purposes.

Another important factor in this scenario is the potential for a competitor to be suggested, so you would need to add instructions to the request to minimise this risk. Post-processing can also help: before a response is shown, it can be checked for undesirable words, such as competitor names, and those words or the whole response can be filtered out.

- Intellectual Property: In the context of product suggestions and recommendations, I would suspect that this would be a minimal risk given the training data was in the public domain. However, it’s an important consideration, as there is a chance that copyrighted material may come back as a response.

- Privacy: With the release of the ChatGPT API, OpenAI has made some changes to its data usage policies. OpenAI will retain API data for 30 days for the purposes of monitoring abuse and misuse. It claims that data submitted will not be used to train and improve its models; however, users can opt in to share data for this purpose. These data policies apply strictly to API consumers.

There are techniques and tools that could help mitigate the risk of sending personal data, such as privacy detection tools and ML models. For a lean approach during experimentation, you could run regular expressions over the text before the request is sent to the OpenAI API. Regular expressions (also known as regex) find patterns in strings of text and can detect things such as credit card numbers and addresses.

- Cost: At the time of writing, the gpt-3.5-turbo language model, which is used by the ChatGPT API, costs $0.002 per 1k tokens. Presumably this is in USD. 1k tokens is roughly 750 words. Token usage covers both the text sent in the request and the text returned. This can be somewhat controlled in the request: as per OpenAI’s API documentation, you can specify “max_tokens”, which limits the length of the response.
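Two of the points above lend themselves to a short sketch: the regular-expression check that runs before a request is sent, and a back-of-envelope cost estimate. The card pattern is deliberately simple and only illustrative, since it matches any run of 13 to 16 digits and will miss or over-match real cases. The function names are mine, and the cost figures are the ones quoted above, including the rough 750 words per 1k tokens.

```python
import re

# Illustrative only: 13 to 16 digits, optionally separated by spaces or hyphens.
CARD_PATTERN = re.compile(r"\b\d(?:[ -]?\d){12,15}\b")

PRICE_PER_1K_TOKENS = 0.002  # quoted above, presumably USD
WORDS_PER_1K_TOKENS = 750    # rough rule of thumb quoted above


def redact(text):
    """Mask anything that looks like a card number before it leaves the app."""
    return CARD_PATTERN.sub("[REDACTED CARD]", text)


def estimate_cost(words):
    """Estimate the cost of a given number of words (request plus response)."""
    tokens = words / WORDS_PER_1K_TOKENS * 1000
    return tokens / 1000 * PRICE_PER_1K_TOKENS


safe_text = redact("My card is 4111 1111 1111 1111, thanks")
print(safe_text)
print(f"{estimate_cost(7500):.3f}")
```

On these figures, an exchange totalling about 7,500 words comes to roughly $0.02. Real usage should be read from the token counts the API reports rather than estimated from word counts.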

In addition to some of the mitigations mentioned above, Microsoft has put together a number of toolkits (for Engineers, PMs and Experience Designers) to help with the responsible use of AI, which touch on these very issues. These toolkits can be found here.

So what’s next?

It’s exciting to think about the benefits that ChatGPT could bring. It is still quite early days, and I am sure we will see many improvements over the coming months. We may even see versions emerge for specific use cases, such as MarGPT (used for marketing), AdGPT (used for advertising), MedGPT (used for medical) and many more. It will be interesting to see whether fine-tuning will also be made available for the ChatGPT API, as a model trained on richer, product-specific data could really lift its use within a product.

ChatGPT and GPT-3 might be all the hype right now, but there are a myriad of other AI opportunities to help you stay ahead and grow your product. While all these new technologies can be very exciting and tempting for a product manager or a product team to jump onboard with, they should be used to help solve a problem or introduce new opportunities. Like any other product development decision, it’s essential that you can define and measure the value they bring.

Stay tuned for our next blog that covers other capabilities of GPT-3 and other large language models.